Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

French AI darling Mistral is keeping the new releases coming this summer.

Just days after announcing its own domestic cloud computing service, Mistral Compute, the well-funded company has released an update to its 24B-parameter open source model Mistral Small, moving from release 3.1 to 3.2-24B Instruct-2506.

The new version builds directly on Mistral Small 3.1 and aims to improve specific behaviors such as instruction following, output stability, and function calling robustness. While the overall architecture remains unchanged, the update introduces targeted refinements that affect both internal evaluations and public benchmarks.

According to Mistral AI, Small 3.2 is better at adhering to precise instructions and reduces the likelihood of infinite or repetitive generations, a problem occasionally seen in prior versions when handling long or ambiguous prompts.

Similarly, the function calling template has been upgraded to support more reliable tool use, particularly in frameworks like vLLM.
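
For readers unfamiliar with function calling, the following is a minimal sketch of what a tool-use request can look like when a model is served behind an OpenAI-compatible chat endpoint, which vLLM provides. It is an illustration under stated assumptions, not Mistral's reference code: the repository ID in `MODEL_ID` is assumed, `get_weather` is a hypothetical stub tool, and `example_call` is a hand-written stand-in for a model response rather than real output. No network request is made.

```python
import json

# Assumed Hugging Face repository ID for Small 3.2; check the model page before use.
MODEL_ID = "mistralai/Mistral-Small-3.2-24B-Instruct-2506"


def get_weather(city: str) -> str:
    """Hypothetical local tool; a stub standing in for a real weather API."""
    return f"(stub) weather for {city}"


# Tool description in the OpenAI-style "tools" schema (JSON Schema parameters).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def build_request(user_text: str) -> dict:
    """Build the JSON body for a chat completion request that offers one tool."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_text}],
        "tools": TOOLS,
        "tool_choice": "auto",
    }


def run_tool_call(tool_call: dict) -> str:
    """Dispatch one tool call; the arguments arrive as a JSON-encoded string."""
    registry = {"get_weather": get_weather}
    fn = registry[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])
    return fn(**args)


payload = build_request("What is the weather in Paris?")
print(json.dumps(payload, indent=2))

# Hand-written example of the shape a tool call takes, NOT real model output.
example_call = {
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
}
print(run_tool_call(example_call))
```

The reliability improvements described above matter at the `run_tool_call` step: a model that emits malformed argument JSON or an unknown tool name would make the dispatch fail.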

At the same time, it can run on a setup with a single Nvidia A100 or H100 80GB GPU, which considerably widens the options for businesses with tight compute resources or budgets.

Mistral Small 3.1 was announced in March 2025 as a flagship open release in the 24B-parameter range. It offered full multimodal capabilities, multilingual understanding, and long-context processing of up to 128K tokens.

The model was explicitly positioned against proprietary peers like GPT-4o Mini, Claude 3.5 Haiku, and Gemma 3-it and, according to Mistral, outperformed them on many tasks.

Small 3.1 also emphasized efficient deployment, with claims of running inference at 150 tokens per second and support for on-device use with 32 GB of RAM.

That release came with both base and instruct checkpoints, offering flexibility for fine-tuning across domains such as legal, medical, and technical fields.

In contrast, Small 3.2 focuses on surgical improvements to behavior and reliability. It does not aim to introduce new capabilities or architecture changes. Instead, it acts as a maintenance release: cleaning up edge cases in output generation, tightening instruction compliance, and refining system prompt interactions.

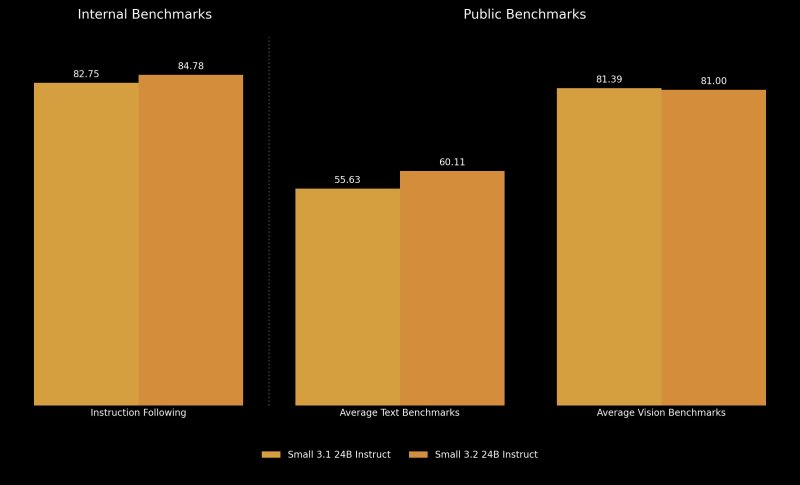

Instruction-following benchmarks show a small but measurable improvement. Mistral's internal accuracy rose from 82.75% in Small 3.1 to 84.78% in Small 3.2.

Likewise, performance on external datasets like Wildbench v2 and Arena Hard v2 improved considerably: Wildbench rose by almost 10 percentage points, while Arena Hard more than doubled, from 19.56% to 43.10%.

Internal metrics also suggest reduced output repetition. The rate of infinite generations dropped from 2.11% in Small 3.1 to 1.29% in Small 3.2, a reduction of roughly 1.6× (about 39% in relative terms). This makes the model more reliable for developers building applications that require consistent, bounded responses.
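
The size of that drop can be checked directly from the two quoted rates. The short sketch below uses only the figures reported above; the function names are illustrative.

```python
# Infinite-generation rates quoted in the article, in percent.
rate_small_3_1 = 2.11
rate_small_3_2 = 1.29


def reduction_factor(before: float, after: float) -> float:
    """How many times smaller `after` is than `before`."""
    return before / after


def relative_drop(before: float, after: float) -> float:
    """Fractional decrease going from `before` to `after`."""
    return (before - after) / before


factor = reduction_factor(rate_small_3_1, rate_small_3_2)
drop = relative_drop(rate_small_3_1, rate_small_3_2)

print(f"reduction factor: {factor:.2f}x")  # prints "reduction factor: 1.64x"
print(f"relative drop: {drop:.1%}")        # prints "relative drop: 38.9%"
```

In other words, the rate fell by a little under two fifths, which is closer to a 1.6× reduction than a full halving.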

Performance across text and coding benchmarks presents a more nuanced picture. Small 3.2 showed gains on HumanEval Plus (88.99% to 92.90%), MBPP Pass@5 (74.63% to 78.33%), and SimpleQA. It also modestly improved its MMLU Pro and MATH results.

Vision benchmarks remain mostly consistent, with slight fluctuations. ChartQA and DocVQA saw marginal gains, while AI2D and Mathvista fell by less than two percentage points. Average vision performance decreased slightly, from 81.39% in Small 3.1 to 81.00% in Small 3.2.

This aligns with Mistral's stated intent: Small 3.2 is not a model overhaul, but a refinement. As such, most benchmarks fall within expected variance, and some regressions appear to be trade-offs for targeted improvements elsewhere.

However, as AI user and influencer @chatgpt21 posted on X: “It got worse on MMLU,” referring to the Massive Multitask Language Understanding benchmark, a multidisciplinary test spanning 57 subjects that is designed to assess broad LLM performance across domains. Indeed, Small 3.2 scored 80.50%, slightly below Small 3.1's 80.62%.
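
To put the gains and regressions side by side, the sketch below tabulates the percentage-point change for each benchmark where both scores are quoted above. The numbers are copied from this article; benchmarks mentioned without figures (such as Wildbench v2 or SimpleQA) are left out rather than guessed.

```python
# (Small 3.1, Small 3.2) scores in percent, copied from the figures quoted above.
scores = {
    "Instruction following (internal)": (82.75, 84.78),
    "Arena Hard v2": (19.56, 43.10),
    "HumanEval Plus": (88.99, 92.90),
    "MBPP Pass@5": (74.63, 78.33),
    "Vision average": (81.39, 81.00),
    "MMLU": (80.62, 80.50),
}


def delta_points(before: float, after: float) -> float:
    """Change in percentage points, rounded to two decimals."""
    return round(after - before, 2)


deltas = {name: delta_points(b, a) for name, (b, a) in scores.items()}

# Benchmarks where Small 3.2 scored lower than Small 3.1.
regressions = [name for name, d in deltas.items() if d < 0]

# Print the largest gain first.
for name, d in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<34} {d:+.2f} pts")

print("regressions:", regressions)
```

The two regressions are each under half a percentage point, which is small next to the gains on Arena Hard v2 and the coding benchmarks.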

Both Small 3.1 and 3.2 are available under the Apache 2.0 license and can be accessed via the popular AI code sharing repository Hugging Face (itself a startup based in France and New York).

Small 3.2 is supported by frameworks like vLLM and Transformers and requires approximately 55 GB of GPU RAM to run in bf16 or fp16 precision.
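
A back-of-the-envelope calculation shows where most of that figure comes from. The sketch below assumes exactly 24 billion parameters and decimal gigabytes (1 GB = 10^9 bytes); both are simplifications, and attributing the remainder to the KV cache, activations, and runtime overhead is a general assumption rather than a breakdown published by Mistral.

```python
# Bytes needed to store one parameter at each precision (both are 16-bit formats).
BYTES_PER_PARAM = {"bf16": 2, "fp16": 2}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


N_PARAMS = 24e9          # assumption: the nominal "24B" taken literally
QUOTED_TOTAL_GB = 55     # figure quoted in the article
SINGLE_GPU_GB = 80       # A100 / H100 80GB, as mentioned in the article

weights_gb = weight_memory_gb(N_PARAMS, "bf16")
headroom_gb = QUOTED_TOTAL_GB - weights_gb
fits_on_one_gpu = QUOTED_TOTAL_GB <= SINGLE_GPU_GB

print(f"weights alone:        {weights_gb:.0f} GB")   # prints "weights alone:        48 GB"
print(f"left for cache, etc.: {headroom_gb:.0f} GB")  # prints "left for cache, etc.: 7 GB"
print(f"fits on one 80 GB GPU: {fits_on_one_gpu}")    # prints "fits on one 80 GB GPU: True"
```

This is also why a single 80 GB A100 or H100 is enough: the weights take about 48 GB at 16-bit precision, and the quoted 55 GB total still leaves room on the card.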

For developers looking to build or serve applications, system prompts and inference examples are provided in the model repository.

While Mistral Small 3.1 is already integrated into platforms like Google Cloud Vertex AI and is scheduled for deployment on NVIDIA NIM and Microsoft Azure, Small 3.2 currently appears limited to self-serve access via Hugging Face and direct deployment.

Mistral Small 3.2 may not shift competitive positioning in the open-weight model space, but it reflects Mistral's commitment to iterative model refinement.

With noticeable improvements in reliability and task handling, particularly around instruction precision and tool use, Small 3.2 offers a cleaner user experience for developers and enterprises that rely on the Mistral ecosystem.

The fact that it is made by a French startup and complies with EU rules and regulations such as GDPR and the EU AI Act also makes it attractive for enterprises working in that part of the world.

Still, for those seeking the biggest jumps in benchmark performance, Small 3.1 remains a reference point, especially since in some cases, such as MMLU, Small 3.2 does not outperform its predecessor. That makes the update more of a stability-focused option than a pure upgrade, depending on the use case.