Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Nvidia is deploying its AI chips in data centers and in what it calls AI factories around the world, and the company announced today that its Blackwell chips lead the AI benchmarks.

NVIDIA and its partners are accelerating the training and deployment of next-generation AI applications that use the latest advances in training and inference.

The NVIDIA Blackwell architecture is designed to meet the heightened performance requirements of these new applications. In the latest MLPerf Training round (the 12th since the benchmark's introduction in 2018), the NVIDIA AI platform delivered the highest performance at scale on every benchmark and powered every result submitted on the benchmark's toughest large language model (LLM) test: Llama 3.1 405B pretraining.

The NVIDIA platform was the only one to submit results on every MLPerf Training v5.0 benchmark, underscoring its performance and versatility across a wide range of AI workloads spanning LLMs, recommendation systems, multimodal LLMs, object detection and graph neural networks.

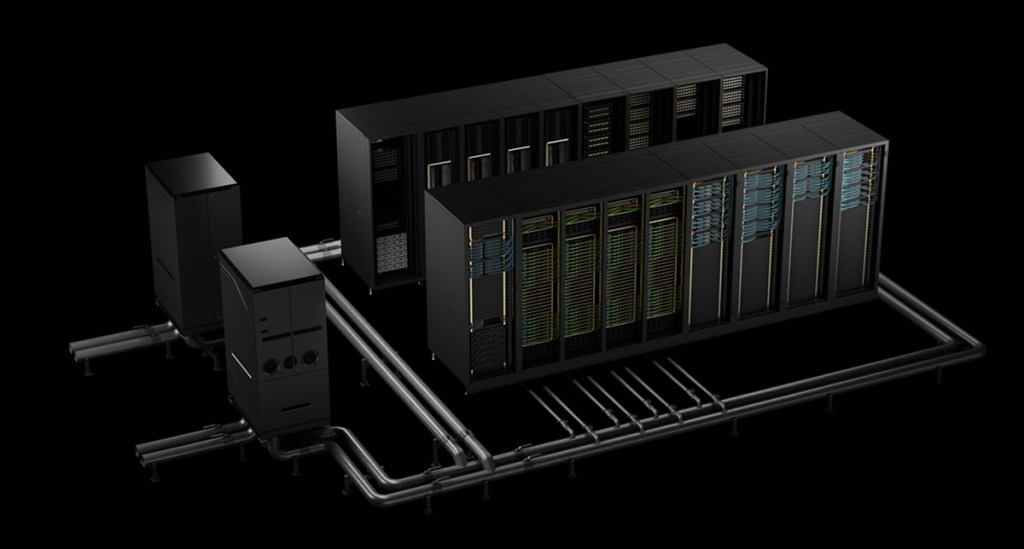

The at-scale submissions used two AI supercomputers powered by the NVIDIA Blackwell platform: Tyche, built using rack-scale NVIDIA GB200 NVL72 systems, and Nyx, based on NVIDIA DGX B200 systems. In addition, NVIDIA collaborated with CoreWeave and IBM to submit GB200 NVL72 results using a total of 2,496 Blackwell GPUs and 1,248 NVIDIA Grace CPUs.

On the new Llama 3.1 405B pretraining benchmark, Blackwell delivered 2.2 times the performance of the previous-generation architecture at the same scale.

On the Llama 2 70B LoRA fine-tuning benchmark, NVIDIA DGX B200 systems powered by eight Blackwell GPUs delivered 2.5 times the performance of a submission using the same number of GPUs in the previous round.

These performance leaps highlight advances in the Blackwell architecture, including high-density liquid-cooled racks, 13.4TB of coherent memory per rack, and NVIDIA NVLink and NVLink Switch interconnect technologies for scale-up. In addition, innovations in the NVIDIA NeMo Framework software stack raise the bar for next-generation multimodal LLM training, which is essential for bringing agentic AI applications to market.

These agentic AI-powered applications will one day run in AI factories, the engines of the agentic AI economy. These new applications will produce tokens and valuable intelligence that can be applied to almost every industry and academic field.

The Nvidia data center platform includes GPUs, CPUs, high-speed fabrics and networking, as well as a wide range of software such as NVIDIA CUDA-X libraries, the NeMo Framework, NVIDIA TensorRT-LLM and NVIDIA Dynamo. This highly tuned combination of hardware and software lets organizations train and deploy models faster, considerably shortening time to value.

NVIDIA's partner ecosystem participated extensively in this MLPerf round. Beyond the submission with CoreWeave and IBM, other strong submissions came from ASUS, Cisco, Giga Computing, Lambda, Lenovo, Quanta Cloud Technology and Supermicro.

The first MLPERF training submissions using GB200 were developed by MlCommons Association with more than 125 members and affiliates. Its metric of time to train ensures that the training process produces a model that meets the accuracy required. And its standardized reference execution rules guarantee comparisons of apple to apple performance. The results are evaluated by peers before publication.

Dave Salvator is someone I knew when he was part of the tech press. Now he is director of accelerated computing products in the Accelerated Computing Group at Nvidia. In a press briefing, Salvator noted that Nvidia CEO Jensen Huang talks about the notion of different types of scaling laws for AI. They include pretraining, where you essentially teach the AI model knowledge, starting from zero. It is a heavy computational lift that is the backbone of AI, said Salvator.

From there, Nvidia moves into post-training scaling. This is where models go to school, in a sense, and where you can do things like fine-tuning: you bring in a different data set to teach a pretrained model that has already been trained to a point, giving it additional domain knowledge from your particular data set.

And then finally, there is test-time scaling, or reasoning, sometimes called long thinking. Another term for it is agentic AI. It is AI that can actually think, reason and solve problems. Whereas before you would ask a question and get a relatively simple answer, test-time scaling and reasoning can work on much more complicated tasks and deliver rich analysis.

And then there is also generative AI, which can generate content on an as-needed basis, including text summaries and translations, but also visual and even audio content. There are many types of scaling taking place in the AI world. For the benchmarks, Nvidia focused on pretraining and post-training results.

“This is where AI begins what we call the investment phase of AI. And then when you get into inference and deploying these models, and essentially generating those tokens, that is where you start to get your return on your investment in AI,” he said.

The MLPerf benchmark is in its 12th round and dates back to 2018. The consortium behind it has more than 125 members, and it is used for both inference and training tests. The industry considers the benchmarks robust.

“As I am sure many of you know, performance claims in the AI world can sometimes be a bit of the Wild West. MLPerf seeks to bring some order to that chaos,” said Salvator. “Everyone has to do the same amount of work. Everyone is held to the same standard in terms of convergence. And once results are submitted, they are reviewed and vetted by all the other submitters, and people can ask questions and even challenge results.”

The most intuitive metric for training is how long it takes to train an AI model to what is called convergence, meaning it reaches a specified level of accuracy. This makes it an apples-to-apples comparison, said Salvator, and the benchmark is continually updated to reflect changing workloads.
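To make the time-to-train idea concrete, here is a minimal Python sketch, assuming a toy training loop. The names `time_to_train` and `speedup` are hypothetical illustrations, not MLPerf tooling; the real MLPerf rules also specify what counts toward the clock, how often evaluation runs and how multiple runs are aggregated, none of which this sketch models.

```python
import time
from typing import Callable, Tuple


def time_to_train(
    train_one_epoch: Callable[[], None],
    evaluate: Callable[[], float],
    target_quality: float,
    max_epochs: int = 100,
) -> Tuple[float, int]:
    """Hypothetical helper: train until evaluate() reaches target_quality.

    Returns (wall-clock seconds, epochs run). A simplified illustration of a
    time-to-convergence metric, not MLPerf's reference implementation.
    """
    start = time.perf_counter()
    for epoch in range(1, max_epochs + 1):
        train_one_epoch()
        # Evaluate after every epoch; stop the clock at the first time
        # the model meets the quality target ("convergence").
        if evaluate() >= target_quality:
            return time.perf_counter() - start, epoch
    raise RuntimeError(f"did not reach {target_quality} within {max_epochs} epochs")


def speedup(baseline_seconds: float, new_seconds: float) -> float:
    """Ratio of times to train: 2.5 means the new system converged 2.5x faster."""
    return baseline_seconds / new_seconds


if __name__ == "__main__":
    # Toy "model" whose accuracy rises by 0.25 per epoch (made-up numbers).
    state = {"accuracy": 0.0}

    def train_one_epoch() -> None:
        state["accuracy"] += 0.25

    def evaluate() -> float:
        return state["accuracy"]

    seconds, epochs = time_to_train(train_one_epoch, evaluate, target_quality=0.75)
    print(f"converged after {epochs} epochs in {seconds:.6f} s")
    print(speedup(100.0, 40.0))  # hypothetical timings in seconds
```

Because every submitter must hit the same quality target, comparing two systems reduces to a ratio of wall-clock times; generational figures such as "2.5 times the performance" are, in broad terms, comparisons of this kind.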

This year, there is a new Llama 3.1 405B workload, which replaces the GPT-3 175B workload previously in the benchmark. Salvator noted that Nvidia set a number of records in the benchmarks. The NVIDIA GB200 NVL72 AI factories are fresh from the factory floor. From one generation of chips (Hopper) to the next (Blackwell), Nvidia saw a 2.5x improvement in image generation results.

“We are still fairly early in the Blackwell product life cycle, so we fully expect to get more performance out of the Blackwell architecture over time as we continue to refine our software optimizations and as new, frankly heavier, workloads come to market,” said Salvator.

He noted that Nvidia was the only company to have submitted entries for all benchmarks.

“The great performance we are achieving comes from a combination of things. It is our fifth-generation NVLink and NVSwitch delivering up to 2.66 times more performance, along with other general architectural goodness in Blackwell, along with our ongoing software optimizations that make that performance possible,” said Salvator.

He added: “Because of Nvidia's heritage, we have long been known as the GPU guys. We certainly make great GPUs, but we have gone from being just a chip company to being a system company with things like our DGX servers, to building entire racks with designs that help our partners get to market, to building entire data centers and infrastructure, which we now call AI factories.”